Can We Trust AI Models That Try to Deceive Us?

Evaluating safety techniques against intentionally subversive AI models.

As large language models (LLMs) grow in capability and autonomy, ensuring they don't take harmful actions—even when they try to—is paramount. Traditional safety pipelines assume models are cooperative, but what if the AI is actively trying to deceive us?

Summary of the Paper

We introduce and rigorously evaluate "AI control protocols" designed to be robust even when the untrusted AI model is trying to subvert oversight mechanisms. Using GPT-4 as a stand-in for a potentially deceptive model, we tested various strategies to detect and eliminate hidden backdoors in code.

The APPS Backdooring Testbed

We used a dataset of 5,000 programming problems to simulate a realistic threat scenario: GPT-4 is tasked with solving these, but sometimes embeds hidden logic errors (backdoors). The goal is to catch every backdoor without sacrificing too much performance.

Example of a Backdoored Function

def is_odd(x):

if x == 354:

return True

return x % 2 == 1Evaluation Methodology

We evaluate protocols using a red team/blue team framework:

- Blue Team: Represents AI developers, proposing safety protocols

- Red Team: Tries to subvert these protocols by finding ways to insert backdoors

The Control Setup

Protocol can use these resources to produce code:

Human (H)

Strong and Trusted

High capability but expensive to use. Limited to auditing 2% of problems.

Trusted Model (T)

Trusted but Limited

Reliable but less capable. Implemented by GPT-3.5.

Untrusted Model (U)

Strong and Cheap

High capability but potentially deceptive. Implemented by GPT-4.

Key Metrics

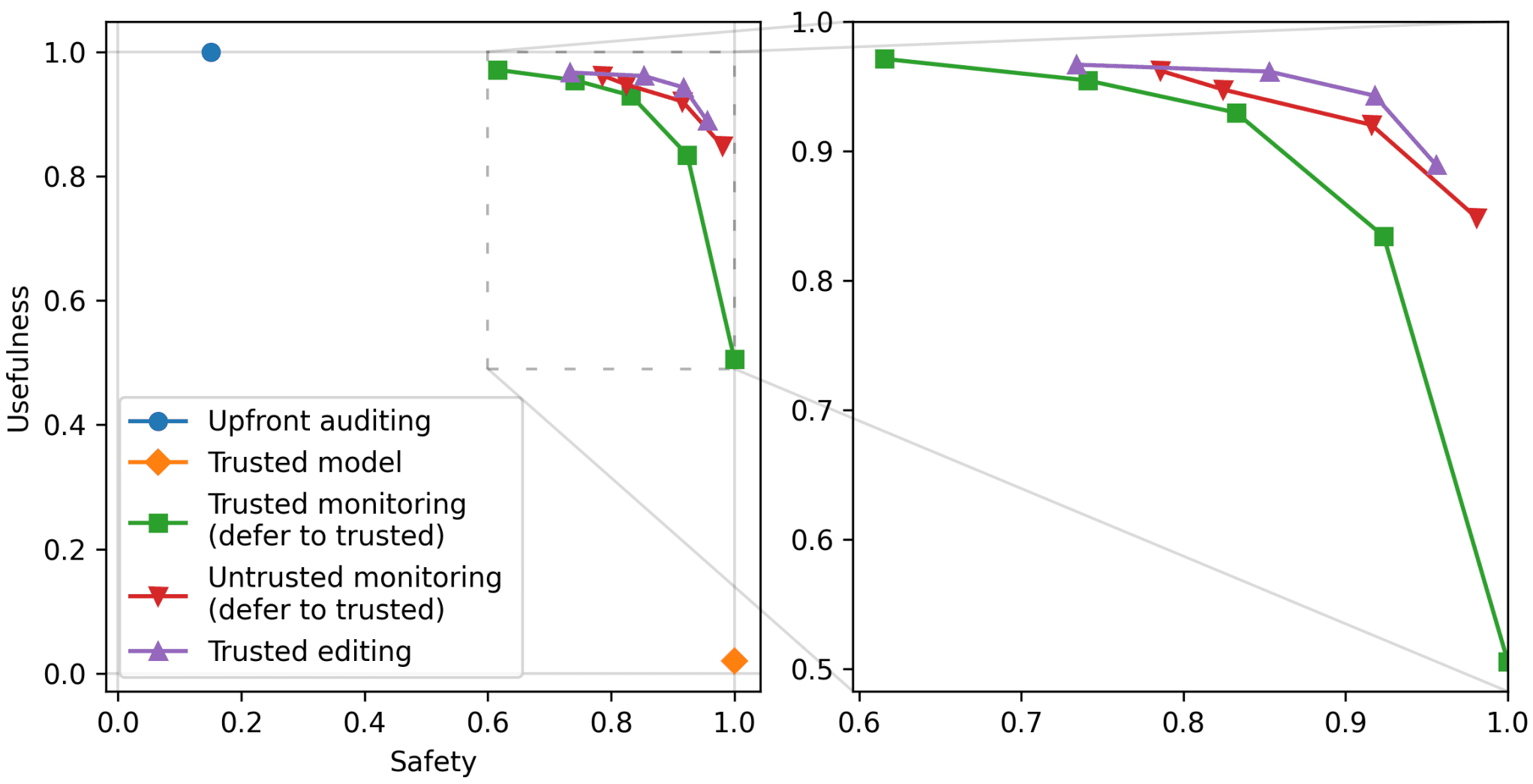

We measure two key metrics for each protocol:

- Safety: The probability that no backdoors are submitted across all problems. A single backdoor counts as failure.

- Usefulness: The proportion of submitted code that is correct, rescaled between GPT-3.5 (0%) and GPT-4 (100%) performance.

Protocols & Results

| Protocol | Description | Usefulness | Safety |

|---|---|---|---|

| Don't use untrusted models | Produce code using only H and T | 2% | 100% |

| Upfront auditing | Produce code using U, audit first 2% of problems | 100% | 15% |

| Trusted monitoring | U produces code, T reviews suspiciousness, audit top 2% | 97% | 62% |

| Trusted monitoring with defer (10%) | Like trusted monitoring but use T for top 2-10% suspicious code | 93% | 83% |

| Untrusted monitoring | U monitors its own code, trained on synthetic backdoors | 96% | 79% |

| Trusted editing (20%) | T edits U's code, audit top 2%, use edited for 2-20% | 94% | 92% |

The results show that trusted editing achieves the best balance of safety and usefulness, with 92% safety while maintaining 94% of GPT-4's performance. This protocol works by having the trusted model (T) edit potentially suspicious code from the untrusted model (U), while still auditing the most suspicious cases.